Innovative Software Solution Architects

Do you have an idea but are struggling to conceptualize the finished result?

Do you want to create a world-class, modern software product that can change the world, but you’re not sure where to start?

We will partner with you to help make sense of the technology landscape. No project is ever the same; there is no “one size fits all.” Working together, we’ll understand your needs and build a custom-tailored solution that uniquely solves your problem.

The finished product is more than a system—it is a robust, living, scalable software asset which will continue to evolve and add value to your business in the months and years to come.

Services

Our thoughtful and in-depth approach emphasizes quality and functional excellence for the finished software product. Take a look at some of our services below.

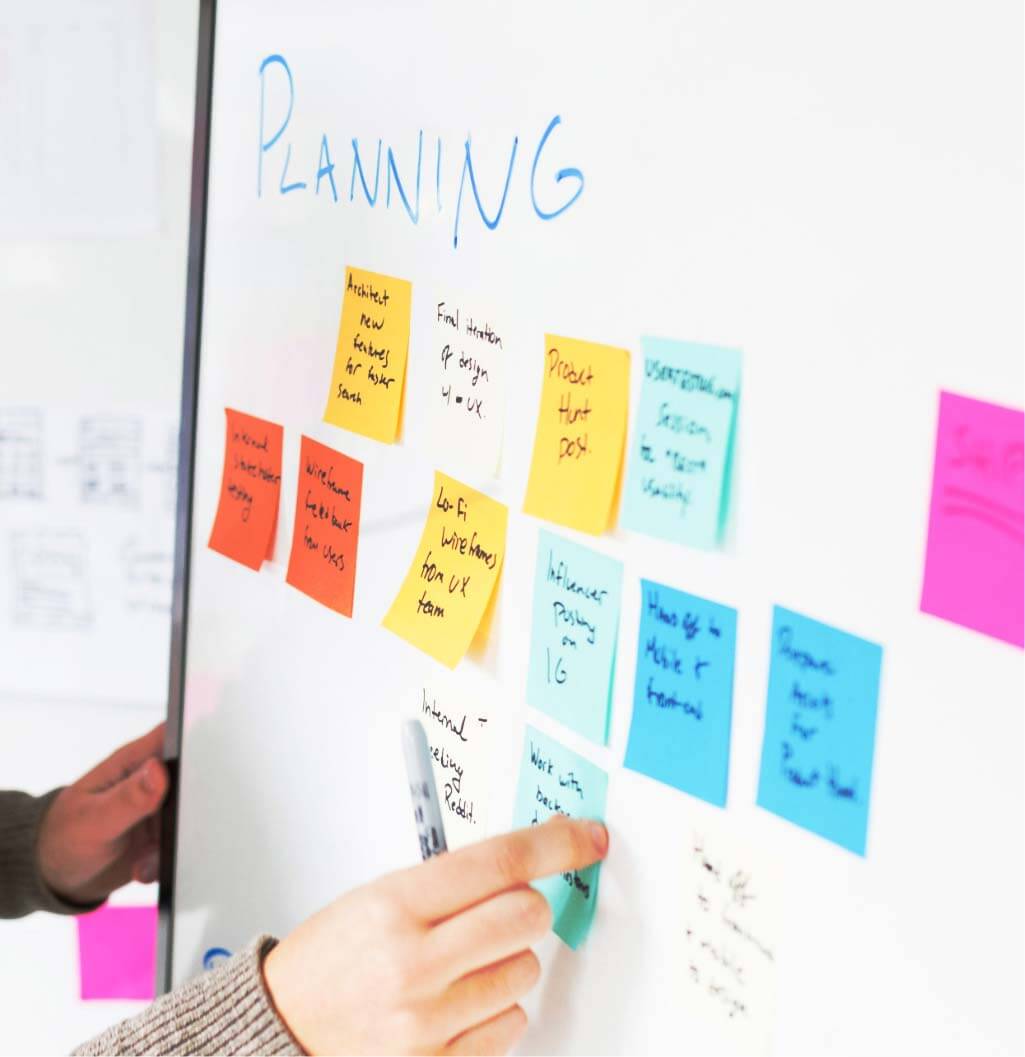

Technology Roadmap

Creation of a technology strategy roadmap that takes your idea from conceptualization to realization, aligned with your business needs.

Software as a Service

Construction of modern Software-as-a-Service progressive web applications, hosted in the Microsoft Azure cloud.

Training & Coaching

Training and coaching of software teams in industry best practices and productivity-enhancement techniques.

We Also Offer...

- Coaching in technical writing skills.

- Individualized career path and personal development mentoring for technology professionals.

Our Approach

With a focus on the discovery process and a high level of client involvement, we build quality, maintainable solutions that solve your problems.

- Initial consultation and evaluation of business needs.

- Client questionnaire.

- Exploration phase.

- Initial design phase.

- Execution phase.

- Evaluation/review phase.

Ready to chat about your next project? Interested in our services?

Inquire Now

About Us

We bring to the table decades of professional expertise in enterprise software engineering. We’ve built countless systems from scratch, leading each project from idea to execution. Our thoughtful, academic approach yields results that are clean, maintainable, robust, and scalable.

Let’s Chat!

For questions about the services we provide or to set up a free consultation, please reach out using the contact form below and we will respond within 1-2 business days.

Your idea is waiting to be brought to life.

Don’t hesitate! Reach out now and let’s make your ambitions a reality.

Let’s Talk!